The Global Collections Initiative

The chief goal of the Global Collections Initiative is to expand electronic access to primary source documentation and data for scholarly research on parts of the world like the Middle East, sub-Saharan Africa, and South Asia, areas where the information landscape differs from that in the U.S. and Western Europe. A major focus of the initiative is access to materials that exist only in digital form. Many of these materials are openly available on the web and on social media platforms; other digital-only materials are available through commercial databases and services. Established mechanisms and practices exist for preserving tangible materials like print books and journals, which follow relatively uniform and stable publishing and distribution models. In the digital realm, however, the realities of production and supply chains present new challenges for libraries.

Jeffrey Garrett’s article, “Archiving the Latin American and Caribbean Web,” outlines the prevailing approach that major libraries take to preserving open-source, web-based materials. That approach involves harvesting HTML files and other digital content and metadata from websites, then storing that data independently in separate, controlled digital environments. Garrett points out some of the limitations of this approach. Such efforts are particularly daunting for complex content like databases and video, for highly dynamic news and social media sites, and for materials that commercial producers maintain behind paywalls and other barriers. If the primary goal is to ensure the long-term accessibility and integrity of web materials, approaches other than web harvesting might serve libraries and scholars better.

Given the overwhelming amount of open web content potentially relevant to area and international studies research, where does one begin? Historically, research libraries have given priority to documentation and data useful across the widest range of humanities and social science disciplines. This means information essential to understanding the major actors and forces in society: news; the documents and archives of governments and NGOs; economic, financial, and geospatial data; and public opinion and population information. In their preservation triage, libraries have also prioritized materials most likely to disappear without their efforts.

New Threats and Challenges

Open-source materials are particularly susceptible to corruption or loss for three reasons: their reliance on highly fluid and complex technologies, loss or suppression by hostile political interests, and the growing privatization of data.

The technology threats are well known. Non-standard or proprietary formats and applications, unstable platforms, and potentially obsolescent media all undermine the persistence and integrity of much open web content. Sites hosted by individuals and by small non-governmental organizations with limited means or technical sophistication are particularly vulnerable.

Recent political trends also pose threats. Censorship and suppression of news and other third-party documentation of illicit government and criminal activity have become more common with the rise of authoritarian regimes. As powers like China, Russia, and Saudi Arabia gain economic and political influence, liberal norms for access to public interest information are likely to erode.

Information that was once widely available to the public is also rapidly being privatized. The new value of data to the corporate and national security sectors is driving the monetization of global news as well as financial, legal, population, and environmental data, eroding the public information domain. According to the Journalism in the Americas blog, paywalls are becoming the norm for major newspapers in Mexico, Brazil, Argentina, and elsewhere in Latin America, as publishers turn to digital subscriptions rather than advertising as their primary source of revenue. Even data traditionally provided by governments, like census and statistical information and legislative proceedings, is now packaged and sold as products by commercial vendors, especially where poorly resourced governments cannot equip their open platforms with the robust functionality modern researchers expect.

An important and particularly vulnerable category of web content is the product of a new phenomenon in the information landscape: large web-based repositories of digital documentation. In 2010, when it released Afghanistan and Iraq War video and text documents leaked from the U.S. military, WikiLeaks created a template that grass-roots activists, journalists, policy researchers, and other civil society actors have since widely adopted. These actors collect and curate critical and often highly sensitive evidence of human rights violations, government corruption, and environmental crime, and expose that documentation on the web. The most widely known of these groups is the International Consortium of Investigative Journalists (ICIJ). In 2016 it broke the Panama Papers scandal, using a trove of over 11 million leaked legal and financial documents to reveal the offshore and illegal banking activities of thousands of world leaders, public figures, and corporations.

Even more endangered is online documentation hosted by small news organizations operating in conflict zones and transitional societies. Zaman al-Wasl, an online news agency established in Homs, Syria, in 2005, has collected and exposed documents on the operations of ISIS, the Syrian government, and Western powers. In 2018 it published a leaked archive of 1.7 million documents, obtained from the Syrian intelligence service, on the disappeared and on victims of arbitrary arrest by the Assad regime.

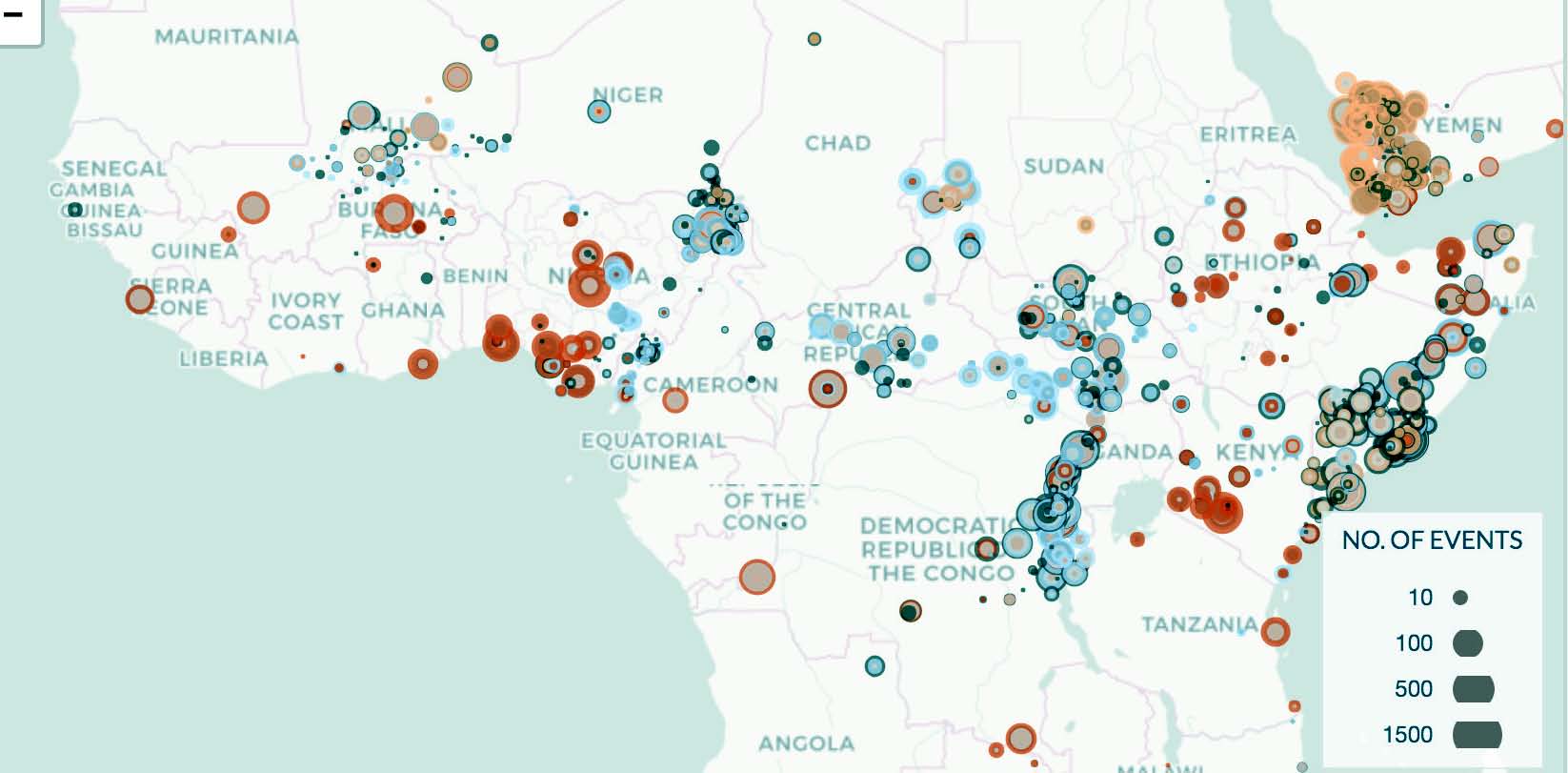

Other types of organizations harvest data on global conflicts, epidemics, trade, and other subjects from social media and the open web. They then generate databases and visualizations that serve a variety of research communities, such as legal scholars, jurists, and policy researchers. Since 1997, the Armed Conflict Location & Event Data Project (ACLED), based at the University of Sussex, has been aggregating and collating data from online news sources on violence and conflict in sixty countries in Africa, South Asia, Southeast Asia, and the Middle East. ACLED provides informative visualization and analysis of these conflicts in near real time.

Such documentation is relevant to public policy in the present, and it will be vital to humanities and social science researchers in the long term. The organizations that preserve such data are not libraries or archives per se, but they have in effect taken on responsibility for stewarding important evidence. They have a vested interest in the survival of the data they gather, at least in the near term. Even so, many of them operate on marginal funding, deploy obsolescent technologies, and struggle to survive.

Some Alternative Approaches

All told, online documentation is being created and exposed in ways that elude capture by conventional web-archiving methods. Harvesters cannot penetrate the paywalls that commercial producers erect around proprietary content, nor the other obstacles activists use to protect sensitive content. Web crawling with standard harvesters is also too slow to capture dynamic visualizations and other resources, like those of ACLED, that pull data from social media and the live web in real time.

This suggests a need for libraries to work further upstream in the information “supply chain.” Support for new stewardship efforts like ICIJ and ACLED could go a long way toward ensuring the survival of critical web content. Rather than independently capturing and archiving these producers’ web content, libraries might do better to engage the producers in shaping a more serviceable and durable product. More research is needed to determine how and where such support is best applied. Some common needs are already evident, however: guidance on secure, non-proprietary platforms and hosting arrangements; best practices to promote discovery, interoperability, and scholarly mining of their content; and legal risk assessment and indemnification for dealing with sensitive materials.

Similarly, collective dealings with the commercial sources of digital data and documentation might mitigate some of the worst effects of paywalls and other barriers to scholarly access. Acting in unison, the academic library community could exploit the robust capabilities the news industry has built to manage and mine the enormous archives of electronic text, audio, moving images, and datasets it acquires and produces. The digital asset management systems (DAMs) used enterprise-wide by organizations like the Associated Press and El País have powerful repository features. Perhaps through national site licenses, news media organizations could be persuaded to harness those capabilities to provide enduring electronic access tailored to scholarly practice.

The web offers an almost infinite source of global data and documentation relevant to humanities and social science research. This apparent surfeit makes the task of finding “the signal in the noise” at once difficult and imperative. Libraries should therefore invest, both locally and collectively, in building the analytical capabilities needed to locate value and redundancy in commercial databases and open access resources alike. Landscape analysis and audits of the major data producers and repositories could provide intelligence for decision-making. As the onus of preservation falls on more and more organizations outside the traditional library orbit, transparency will also become more crucial. To identify high-value targets and priorities, libraries will need a stronger base of knowledge than currently exists.